I think Benedict Evans writes about a lot of really interesting stuff. Sometimes he gets right to the heart of things. Sometimes he’s wrong in important (and interesting!) ways.

This seems to me to be an example of the latter.

> However, it often now seems that content moderation is a Sisyphean task, where we can certainly reduce the problem, but almost by definition cannot solve it. The internet is people: all of society is online now, and so all of society’s problems are expressed, amplified and channeled in new ways by the internet.

Fully agreed! Yes! Absolutely–technical problems are rarely just technical problems, but also social problems.

> We can try to control that, but perhaps a certain level of bad behaviour on the internet and on social might just be inevitable, and we have to decide what we want, just as we did for cars or telephones - we require seat belts and safety standards, and speed limits, but don’t demand that cars be unable to exceed the speed limit.

This, however, does not follow, and it doesn’t follow even for cars. It took a lot of corporate manipulation of people’s beliefs for us to start thinking about car crashes as “accidents”. It took intense lobbying to create the crime of “jaywalking” where before, people had been allowed to walk in the streets their taxes paid for, and people driving cars had been responsible for not hitting others.

Powerful entities had it in their interest to make you believe this was all inevitable. People made a lot of money from making us think that this is all just How Things Are, that we have to accept the costs and deaths. They’re still making a lot of money. Even those seat belt laws exist because the auto lobby wanted to get out of having to build in airbags.

Automotive technology is technology just like the Internet is technology. Where technology lets us leap over natural physical limitations, “human nature” isn’t an inherent, fundamental part of the situation. Why did we build the cars to go fast? Why do people assume they should be able to get around faster in a car than on a bike, even around pedestrians? If I write a letter that tells you to kill yourself and have a print shop blow it up into a poster, is the print shop at all responsible for their involvement in my words? What if they put out a self-service photocopier and choose not to look at what people are using it for? Is it different if it’s not a poster but a banner ad? A tweet? Sure, we can acknowledge that it’s some part of human nature that we’re going to be shitty to each other, but should we be helping each other do it at 70 miles per hour? The speed of light? These are uncomfortably political questions, questions that have power tied up in them.

And that’s exactly why I think it’s important to reject Evans’ thinking here.

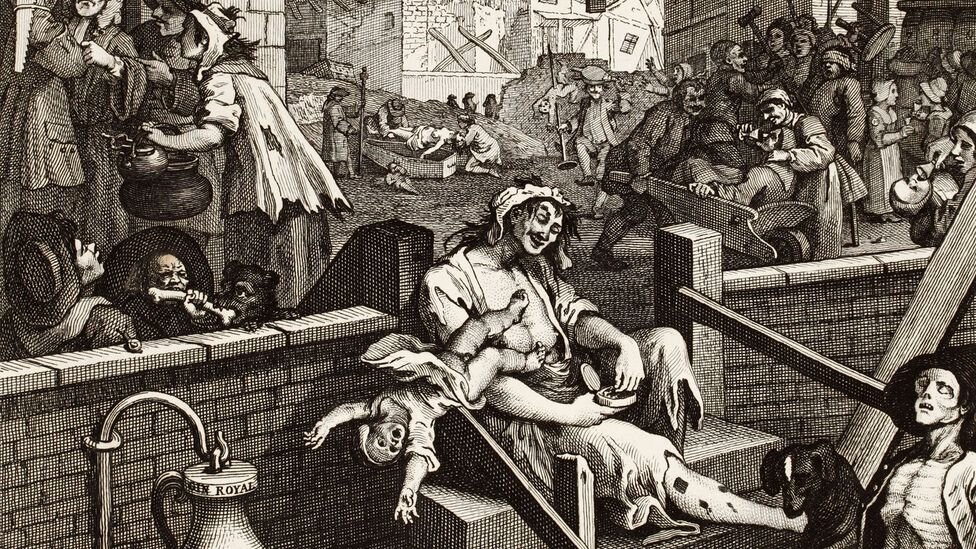

> Some people argue that the problem is ads, or algorithmic feeds (both of which ideas I disagree with pretty strongly - I wrote about newsfeeds here), but this gets at the same underlying point: instead of looking for bad stuff, perhaps we should change the paths that bad stuff can abuse. The wave of anonymous messaging apps that appeared a few years ago exemplify this - it turned out that bullying was such an inherent effect of the basic concept that they all had to shut down. Hogarth contrasted dystopian Gin Lane with utopian Beer Street - alcohol is good, so long as it’s the right kind.
>
> Of course, if the underlying problem is human nature, then you can still only channel it.

He does not argue in the linked piece that algorithmic newsfeeds are worth their bad effects, only that they’re a response to a real problem – that’s why I liked the linked piece!

Let’s not make fuzzy comparisons, even with tongue in cheek; Dickens was quite right to note that the “great vice” of “gin-drinking in England” arose out of “poverty”, “wretchedness[,] and dirt”, which are no more human nature than all the riches of Silicon Valley… and as a non-teetotaler I am free to add without fear of being thought a nag that any quantity of alcohol is bad for your health. There aren’t inherent inducements to good or evil in beer or gin. The existing context is too important, and someone’s getting rich off of selling you either.

I’m not even sure I believe that we can know anonymous messaging inherently leads to bullying, only that the populations who seize upon it in our preexisting imperfect context are using it toward that end.

But if you’re willing to believe that YikYak had to die, why then believe that an engagement-maximization framework – algorithms harvesting your eyeballs – is not having significant impact on the way we interact with each other? Is this guy invested in Facebook? Did any philosopher, pessimist or optimist, imagine like count displays in their state of nature?

Ah, blech, the guy’s got a history in VC. I shouldn’t have opened the Twitter to try to confirm pronouns. There’s a very sad genetic fallacy (well, heuristic) we could apply here but I’m too busy to let myself be saddened by its conclusions.