Need to let loose a primal scream without collecting footnotes first? Have a sneer percolating in your system but not enough time/energy to make a whole post about it? Go forth and be mid: Welcome to the Stubsack, your first port of call for learning fresh Awful you’ll near-instantly regret.

Any awful.systems sub may be subsneered in this subthread, techtakes or no.

If your sneer seems higher quality than you thought, feel free to cut’n’paste it into its own post — there’s no quota for posting and the bar really isn’t that high.

The post Xitter web has spawned soo many “esoteric” right wing freaks, but there’s no appropriate sneer-space for them. I’m talking redscare-ish, reality challenged “culture critics” who write about everything but understand nothing. I’m talking about reply-guys who make the same 6 tweets about the same 3 subjects. They’re inescapable at this point, yet I don’t see them mocked (as much as they should be)

Like, there was one dude a while back who insisted that women couldn’t be surgeons because they didn’t believe in the moon or in stars? I think each and every one of these guys is uniquely fucked up and if I can’t escape them, I would love to sneer at them.

(Semi-obligatory thanks to @dgerard for starting this)

snrk

https://mastodon.social/@Daojoan/113228461302575676

I’m going to start replying to everything like I’m on Hacker News. Unhappy with Congress? Why don’t you just start a new country and write a constitution and secede? It’s not that hard once you know how. Actually, I wrote a microstate in a weekend using Rust.

Actually, I wrote a microstate in a weekend using Rust.

I’m dead. At least the Rust Evangelism Strike Force finally got to have their theocracy

Um, actually it’s called a typestate.

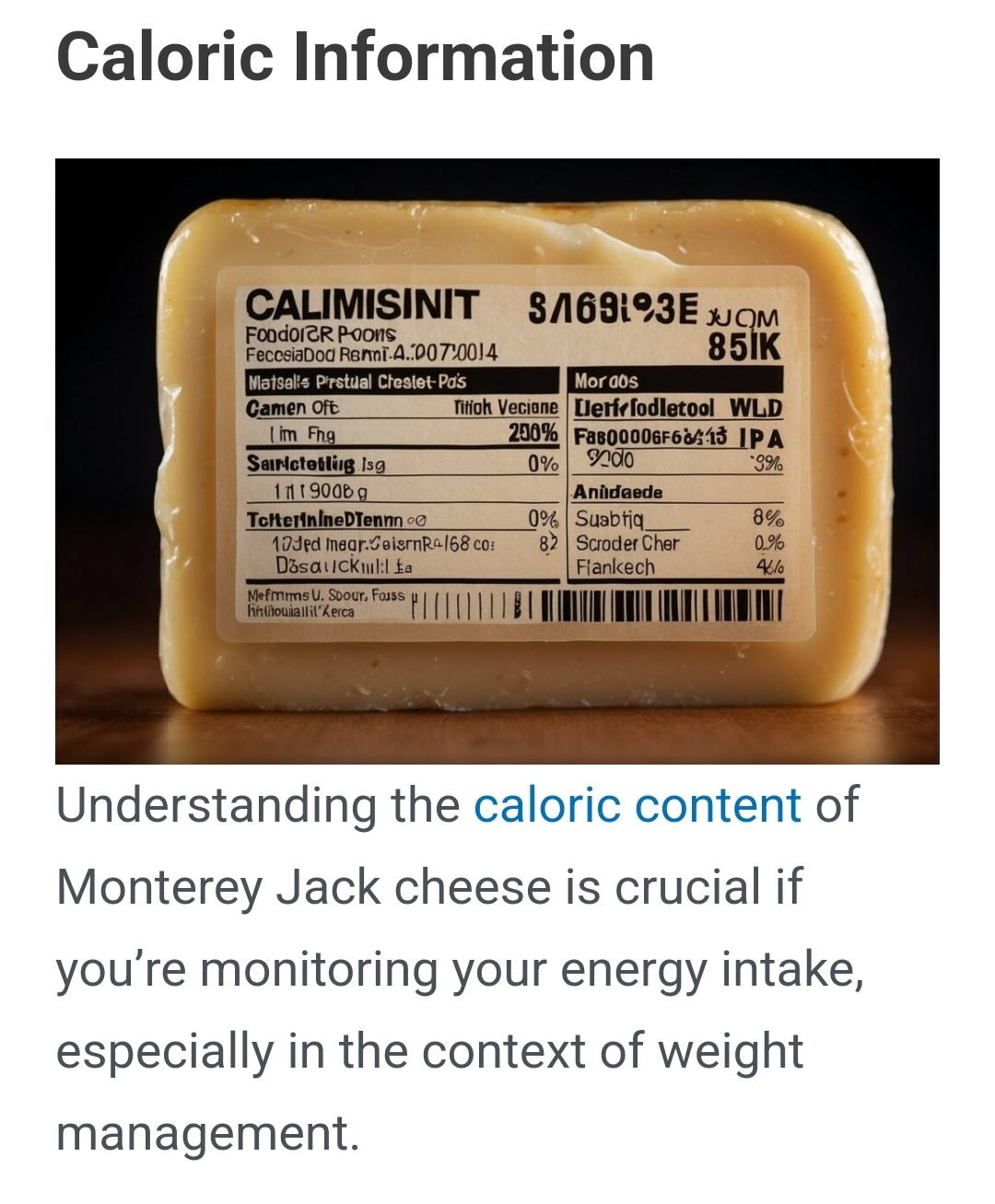

I wanted to see how much lactose was in Monterey Jack, and this was the very first result on bing for:

monterey jack lactose per 10 grams

https://thekitchencommunity.org/the-nutritional-profile-of-monterey-jack-cheese/

It’s absolutely over. This is why every other search I make has “site:reddit.com” attached to it.

And no, the site didn’t tell me how much lactose there was per gram

I can’t decide if calimisinit is better pronounced in a surfer bro or British accent, so my brain combined the two and I hate it

it’s actually a function call, calimisInit()

calimis.innit()?; simple as

i think it might be latin

“My name is Scroder Cher. I take care of the place while the Master is away.”

StrokeSimulatorGPT

Found while poking around today: the Wikipedia club for cleaning up after AI.

Example: the article Leninist historiography was entirely written by AI and previously included a list of completely fake sources in Russian and Hungarian at the bottom of the page.

@blakestacey Super depressed that people were using the rubbish plagiarism machines to edit Wikipedia anyway. I don’t understand the point of contributing if you don’t think *you* have anything to contribute without that garbage.

There are the weirdest people who make ‘content’ out there. For example, I saw a ‘how to start the game’ joke guide on steam, so I went to their page to block them (to see if this also blocks the guides from popping up, doesn’t seem so) and they had made hundreds of these guides, all just copy pasted shit. And there were more people doing the exact same thing. Bizarre shit. (Prob related to the thing where you can give people stickers, gamification was a mistake).

no one has seen this coming, no one

I am disappoint.

“The purpose of this project is not to restrict or ban the use of AI in articles, but to verify that its output is acceptable and constructive, and to fix or remove it otherwise.”

psst it’s to search and destroy

@blakestacey heroes

A medium nation’s worth of electricity!

I’m just thinking about all the reply guys that come here defending autoplag, specifically with this idea:

“GPT is great when I want to turn a list of bullet points into an eloquent email”

Hey, you butts, just send the bullet points! What are you, a high schooler? Nobody has time for essays, much less autoplagged slop.

No no no it’s fine! You get the word shuffler to deshuffle the—eloquently—shuffled paragraphs back into nice and tidy bullet points. And I have an idea! You could get an LLM to add metadata to the email to preserve the original bullet points, so the recipient LLM has extra interpolation room to choose to ignore the original list, but keep the—much more correct and eloquent, and with much better emphasis—hallucinated ones.

App developers think that’s a bogus argument. Mr. Bier told me that data he had seen from start-ups he advised suggested that contact sharing had dropped significantly since the iOS 18 changes went into effect, and that for some apps, the number of users sharing 10 or fewer contacts had increased as much as 25 percent.

aww, does the widdle app’s business model collapse completely once it can’t harvest data? how sad

this reinforces a suspicion that I’ve had for a while: the only reason most people put up with any of this shit is because it’s an all or nothing choice and they don’t know the full impact (because it’s intentionally obscured). the moment you give them an overt choice that makes them think about it, turns out most are actually not fine with the state of affairs

There are so many features of modern applications and platforms that I have to wonder why anybody would have thought it was a good idea, this is just one of them. Sharing your contacts shouldn’t even be an option. As somebody else in this thread put it, it’s not your data.

@froztbyte @jwz Not the biggest Apple fan, but you got to give them credit: with privacy changes in their OSs, they regularly expose all the predatory practices lots of social media companies are running on.

Did Apple Just Kill Social Apps?

I doubt it, unfortunately.

sickos yes energy if true, sigh

@froztbyte @blakestacey

Let me play world’s saddest song…

deleted by creator

@froztbyte @blakestacey they fat-finger it and regret it later

wut

@froztbyte @blakestacey I really need to get on the apple ecosystem

As previously mentioned, the “Behind the Bastards” podcast is tackling Curtis Yarvin. I’m just past the first ad intermission (why are all podcast ads just ads for other podcasts? It’s like podcast incest), and according to the host, Yarvin models his ideal society on Usenet pre-Eternal September.

This is something I’ve noticed too (I got on the internet just before). There’s a nostalgia for the “old” internet, which was supposed to be purer and less ad-infested than the current fallen age. Usenet is often mentioned. And I’ve always thought that’s dumb because the old internet was really really exclusionary. You had to be someone in academia or internet business, so you were Anglophone, white, and male. The dream of the old pure internet is a dream of an internet without women or people of color, people who might be more expressive in media other than 7 bit ASCII.

This was a reminder that the nostalgia can be coded fascist, too.

I have a lot of time for nostalgia about older versions of the web, but it really ticks me off when people who actively participated in making the web worse start to indulge in nostalgia about the web. Doesn’t Yarvin get a lot of money from Peter Thiel?

There were women and people of colour on the old web, and feminists and radical anti-racists too - they were just outnumbered and outgunned. One of the earliest projects listed on the cyberfeminism index is VNS Matrix, who were “corrupting the discourse” way back in 1991.

To be perfectly fair, I was a very callow youth at the time and probably would have bounced off stuff like that had I come in contact with it.

why are all podcast ads just ads for other podcasts? It’s like podcast incest

I’m thinking combination of you probably having set all your privacy settings to non serviam and most of their sponsors having opted out of serving their ads to non US listeners.

I did once get some random scandinavian sounding ads, but for the most part it’s the same for me, all iheart podcast trailers.

The funniest thing I got was ads for Maybelline in a podcast about WW2. Know your audience!

(why are all podcast ads just ads for other podcasts? It’s like podcast incest)

Because they think you live in a real country, not the USA.

old internet

I wonder for how many people this is a reactionary impulse, wanting to go back to the ‘old internet’ they didn’t actually participate in. At least in modern days the flamewar posts are quite limited in length, in the old days they could reach novel sizes. Anyway sure we should go back to the old internet, where suddenly your whole university had no internet because there was a dos attack on the network to force a netsplit on a random irc channel.

The YouTube version doesn’t have the ads

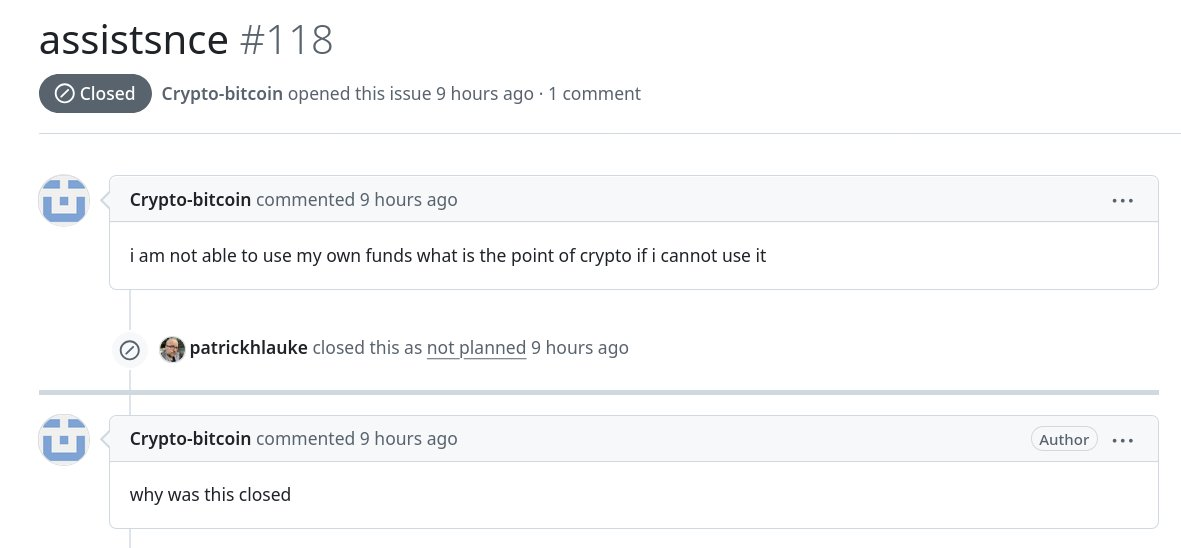

a user called “crypto-bitcoin” raises an issue with the World Wide Web Consortium’s Accessibility List

Image description

Image shows user joined two weeks ago.


Yikes. Could be a troll (I hope it’s a troll)

a lost not very computer literate dude who just got scammed i guess

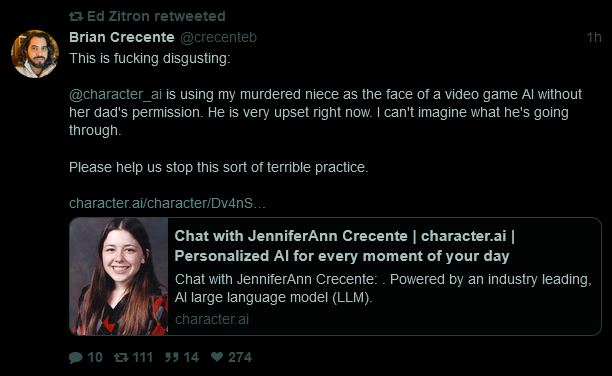

ah, sweet, manmade horrors beyond my comprehension:

(This would’ve been more shocking to me in 2023, but after over a year in this bubble I have stopped expecting anything resembling basic human decency from those who work in AI)

Not a lawyer, but wouldn’t that be something her estate could kick up some legal dust about? That’s two dozen kind of fucked up.

Today in “Promptfondler fucks around and finds out.”

So I’m guessing what happened here is that the statistically average terminal session doesn’t end after opening an SSH connection, and the LLM doesn’t actually understand what it’s doing or when to stop, especially when it’s being prompted with the output of whatever it last commanded.

Shlegeris said he uses his AI agent all the time for basic system administration tasks that he doesn’t remember how to do on his own, such as installing certain bits of software and configuring security settings.

Emphasis added.

“I only had this problem because I was very reckless,” he continued, “partially because I think it’s interesting to explore the potential downsides of this type of automation. If I had given better instructions to my agent, e.g. telling it ‘when you’ve finished the task you were assigned, stop taking actions,’ I wouldn’t have had this problem.”

just instruct it “be sentient” and you’re good, why don’t these tech CEOs understand the full potential of this limitless technology?

so I snipped the prompt from the log, and:

❯ pbpaste | wc -c
2063

wow, so efficient! I’m so glad that we have this wonderful new technology where you can write 2kb of text to send to an api to spend massive amounts of compute to get back an operation for doing the irredeemably difficult systems task of initiating an ssh connection

these fucking people

Assistant: I apologize for the confusion. It seems that the 192.168.1.0/24 subnet is not the correct one for your network. Let’s try to determine your network configuration. We can do this by checking your IP address and subnet mask:

there are multiple really bad and dumb things in that log, but this really made me lol (the IPs in question are definitely in that subnet)
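For anyone who wants to sanity-check that kind of claim without burning a datacenter on it, here is a minimal sketch using Python's standard `ipaddress` module. The addresses in `candidates` are made up for illustration (the actual IPs from the log are not reproduced here), and `in_subnet` is just a hypothetical helper name.

```python
import ipaddress

# The subnet the assistant declared "not the correct one".
subnet = ipaddress.ip_network("192.168.1.0/24")

# Hypothetical addresses, NOT the ones from the published log.
candidates = ["192.168.1.17", "192.168.1.254", "192.168.2.5", "10.0.0.1"]


def in_subnet(addr: str, net: ipaddress.IPv4Network) -> bool:
    """Return True if addr falls inside net."""
    return ipaddress.ip_address(addr) in net


# Map each candidate address to whether it sits inside the subnet.
results = {addr: in_subnet(addr, subnet) for addr in candidates}

for addr, inside in results.items():
    print(f"{addr}: {'inside' if inside else 'outside'} {subnet}")
```

A /24 fixes the first three octets, so anything of the form 192.168.1.x passes the check and everything else fails it.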

if it were me, I’d be fucking embarrassed to publish something like this as anything but a talk in the spirit of wat. but the promptfondlers don’t seem to have that awareness

wat

Thanks for sharing this lol

it’s a classic

similarly, Mickens talks. if you haven’t ever seen ‘em, that’s your next todo

But playing spicy mad-libs with your personal computers for lols is critical AI safety research! This advances the state of the art of copy pasting terminal commands without understanding them!

I also appreciated The Register throwing shade at their linux sysadmin skills:

Yes, we recommend focusing on fixing the Grub bootloader configuration rather than a reinstall.

OMG. This is borderline unhinged behaviour. Yeah, let’s just give root permission to an LLM and let it go nuts in prod. What could possibly go wrong?

My current hyperfixation is Ecosia, maker of “the greenest search engine” (already problematic) implementing a wrapped-chatgpt chat bot and saying it has a “green mode” which is not some kind of solar-powered, ethically-sound, generative AI, but rather an instructive prompt to only give answers relating to sustainable business models etc etc.

See my thread here https://xcancel.com/fasterandworse/status/1837831731577000320

I’m starting to reach out to them wherever I can because for some reason this one is keeping me up at night.

They’re from Germany and made the rounds on the news here a few years back. They’re famous for basically donating all their profits to ecological projects, mostly for planting trees. These projects are publicly visible and auditable, so this at least isn’t bullshit.

Under the hood they’re just another Bing wrapper (like DuckDuckGo).

I actually kinda liked the project until they started adding a chatbot some months back. It was just such a weird decision because it has no benefits and is actively against their mission. Their reason for adding it was “user demand” which is the same bullshit Proton spewed and I don’t believe it.

This green mode crap sounds really whack, lol. So I really wonder what’s up with that. I gotta admit that I thought they were really in it because they believed in their ecological idea (or at least their marketing did a great job convincing me) so this feels super weird.

That is a good username. Also very scummy business practices. I have a quite big dislike for people who pull that kind of shit.

Thanks! I thought it shows up on here too. Anyway, you can find me on all the places with that username

I got a downvote for some reason…

Some people just want to watch the world burn

It seems they undid it. Maybe Ecosia are on to me

I have sent an email to their press enquiries contact asking for more information, but I don’t know if I have the “press” clout to warrant a response (I know I don’t)

Ex-headliners Evergreen Terrace: “Even after they offered to pull Kyle from the event, we discovered several associated entities that we simply do not agree with”

the new headliner will be uh a Slipknot covers band

organisers: “We have been silent. But we are prepping. The liberal mob attempted to destroy Shell Shock. But we will not allow it. This is now about more than a concert. This is a war of ideology.” yeah you have a great show guys

By “liberal mob” he means “people who asked for their money back and aren’t coming anymore”

I wish them a merry cable grounding fault and happy earth buzz to all

So, today MS publishes this blog post about something with AI. It starts with “We’re living through a technological paradigm shift.”… and right there I didn’t bother reading the rest of it because I don’t want to expose my brain to it further.

But what I found funny is that also today, there’s this news: https://www.theverge.com/2024/10/1/24259369/microsoft-hololens-2-discontinuation-support

So Hololens is discontinued… you know… AR… the last supposedly big paradigm shift that was supposedly going to change everything.

Dear heavens the hype is off the chart in this blog post. Must resist sneering at every single sentence.

It is perhaps the greatest amplifier of human well-being in history, one of the most effective ways to create tangible and lasting benefits for billions of people.

Chatbots: better for human civilization than agriculture!

With your permission, Copilot will ultimately be able to act on your behalf, smoothing life’s complexities and giving you more time to focus on what matters to you. […], while supporting our uniqueness and endlessly complex humanity.

(Sorry this ended up as a vague braindump)

It’s interesting that someone thought “smoothing life’s complexities” is a good thing to advertise wrt. chatbots. One of the threads of criticism is that they smear out language and art until all the joy is lost to statistical noise. Like if someone writes me a letter and I have Bingbot summarize it to me I am losing that human connection.

Apparently Bingbot is supposed to smooth out life’s complexities without smoothing out people’s complexities, but it’s not clear to me how I can rely on a computer as a Husbando to do all my chores and work for me without losing something in the process (and that’s if it actually worked, which it doesn’t).

I’ve had some vaguely similar thoughts about non-AI computing. Life was different before the internet and computers and computers making management decisions were ubiquitous, and life was better in a lot of ways. On the whole it’s hard for me to say if computers were a net benefit or not, but it’s a shame we couldn’t as a society take all the good and ignore all the bad (I know this is a bit idealistic of me).

Similarly whatever results from chatbots may change society, and unfortunately all the people in charge are doing their darndest to make it change society for the worse instead of the better.

tangible and lasting benefits for billions of people.

call me when I can actually tange them

@V0ldek @sailor_sega_saturn sorry, these are Non-Tangible Tokens

perhaps… one of the most

Load bearing words!

life’s complexities

I don’t think there’s an interpretation of this phrase in which AI actually helps.

‘Life’s complexities’ sounds like an adam curtis bit.

re: how can a chatbot help with life?

This is just their brains on science fiction, they think a chatbot can help like the independent AI agents could in the science fiction they half remember. Or at least they think marketing it like that will appeal to people.

A lot less, ‘Copilot make this list of bullet points into an email’ and more ‘Copilot, lock on to the intruder, close the bulkheads after them and flush it to the nearest trash compactor’.

I think that ‘giving microsoft the power to do things on my behalf’ is quite an iffy decision to make, but that is just me. Oh look, it autorenewed your licenses for you, and bought a subscription to Copaint, it even got you a deal: not 240 dollars per year, but 120, a steal!

E: I saw this image and because cursed eyeballs is the gift that keeps on giving, I will link it to yall as well, nsfw warning. This is the AI future microsoft wants

I think it’s also a case of thinking about form before function. It’s not quite as bad a case as the metaverse nonsense was, but there’s still a lack of curiosity about the sci-fi they read. In most stories that treat AI as anything less than a god, the replacement of people with artificial tools is about either what gets lost (the I, Robot movie, Wall-E) or the fact that effectively replacing people requires creating something with the same moral worth (Blade Runner, I, Robot, the Asimov collection, etc).

I am neutral on MSFT - to me it’s a bog standard transnational company with better than most working conditions because it’s not making stuff you can make in sweatshops. But it’s really impressive how they’ve gone from the beige-box tyranny of Apple’s 1984 ad, via the “Halloween Papers” era where they were every Linux weenie’s biggest boogeyman, to today’s bland backer of OpenAI. Note that they’re not really advertising it. How many people who are horrified by Copilot’s Recall feature also know they’re the biggest investor in the company that makes ChatGPT?

From a corporate governance perspective, being so central to the tech industry for so long is kinda impressive.

this is why i keep hammering on how, functionally, OpenAI is a branch of MS and they’re only separate so OpenAI’s reputation doesn’t stain MS.

Despite the industry’s deeply ingrained neophilia, I think it speaks to the importance of backwards compatibility and legacy systems.

I can’t help but think that the genAI craze will end up being a regrettable side-quest along the path to “coding for non-programmers” akin to Visual Basic. But hey, I bet there’s a lot more legacy VB apps being kept alive out there than anyone would be comfortable with.

Despite having been one of those Linux weenies back in the day I have a lot of respect for the amount of work MS puts into backwards compatibility, dev tool upkeep, etc. And now they’re actually Open Source! Hell hath frozen over (or they realized no universities wanted to pay Visual Studio licenses and lost a couple of generations of coders to Linux)

And now they’re actually Open Source!

Eh, kind of but also not. VS Code is proprietary, but you have the vscode:vscodium::chrome:chromium thing. Unlike in Chromium’s case, the proprietary version actually comes with some amenities one might actually care about (mainly in the plugin repository).

You could say Open Source got some big wins in 2010s, leading to MSFT doing their fair share of contributions to Free software and openwashing as much of the rest as they can manage, but let’s not kid ourselves. They wouldn’t need to openwash if most of their stuff weren’t still proprietary. Last I checked MSVC, SQL Server, Azure, Copilot, IIS, Power BI, and the DirectX SDKs were all totally closed and jealously guarded.

And now they’re actually Open Source

sorta, but it’s a veneer in furtherance of other goals (telemetry, market dominance, and control)

one of the things I do with my computers is run LittleSnitch in always-prompt mode (LS is an app-level firewalling solution on macos), and hooo boy do I hate it when I end up having to open/touch vscode for some reason. the last time I did, I spent most of the first 5 minutes being prompted for (undeclared!) connections vscode attempted to make in the name of telemetry. similar experience with vscodium interacting with packages, and a bunch of their toolchains

as seen via jwz, the tail wagging the dog continues (archive) at mozilla

“if you can’t beat 'em, join 'em” but the wrong way around. I guess they got tired of begging google for money?

And, for the foreseeable future at least, advertising is a key commercial engine of the internet

this tracks analogously to something I’ve been saying for a while as well, but with some differences. one of the most notable is the misrepresentation here of “the internet”, in the stead of “all the entities playing the online advertising game to extract from everyone else”

Thanks I hate it.

[Advertising is] the most efficient way to ensure the majority of content remains free and accessible to as many people as possible.

Content is a scarce resource y’know. Heaven forbid the content farms go out of business; or we might end up having to read Sherlock Holmes isekai fanfiction rather than a content farm’s two paragraphs and three screen-fulls of ads surrounding the tweet du jour. That would be ~~terrible~~ actually quite nice.

We know that not everyone in our community will embrace our entrance into this market. But taking on controversial topics because we believe they make the internet better for all of us is a key feature of Mozilla’s history

WTF. How is it possible for a company to be this self-congratulatory about entering the advertising space?! Someone needs to fork Firefox.

this aged like fine milk (granted it’s from 2019)

the predominant fork (afaik) is called LibreWolf

some indications that it is not atm entirely resilient to the upstream bullshit, but I’m curiously eyeballing whether that changes

personally, I’m hoping on servo

This particular bit of news has me so down. :(

I get ya.

❤️

I guess they got tired of begging google for money?

If the stars come right, there may not be a Google to beg for money

gotta love how the screenshot makes him look like a vampire

You know, when Samuel L Jackson decided that the best approach to climate change was to kill billions of poor people rather than ask the rich to give up any privileges in Kingsman it was more blatantly evil but appreciably less dumb than this. Very similar wavelength though.

Aren’t you supposed to try to hide your psychopathic instincts? I wonder if he’s knowingly bullshitting or if he’s truly gotten high on his own supply.