cross-posted from: https://lemm.ee/post/54636974

cross-posted from: https://lemmy.world/post/25156749

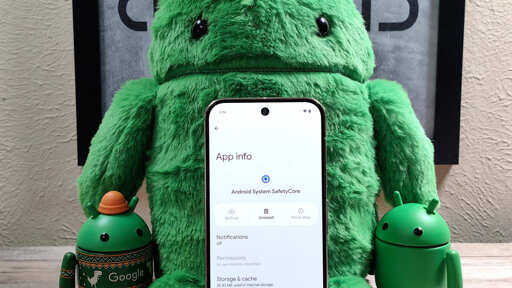

I woke up this morning to find a new little friend on my phone! Android Safety Core.

So what is this great new application that was non-consensually installed on my device, with no indication that it ever was except an alert from Tracker Control?

There isn’t a lot of information on it, but essentially it’s an app that “protects” you from obscene images on your phone.

“One of the new features the company announced is called Sensitive Content Warnings. This is designed to give you more control over seeing and sending nude images. When enabled, it blurs images that might contain nudity before you view them and then prompts you with what Google calls a “speed bump” containing “help-finding resources and options, including to view the content.” The feature also kicks in and shows a so-called “speed bump” when you try to send or forward an image that might contain nudity.” - Android Authority

As for the Google play description, it’s very detailed about what your new friend entails.

Very descriptive.

So essentially it blurs any images containing nudity that are sent to you or that you view.

Obviously the app has to see all the images that are sent to you in order to do this. I’m sure this won’t be abused!
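To make that point concrete, here is a rough Python sketch of how a classify-then-blur filter could be wired up. This is not Google's code and says nothing about how Safety Core is actually implemented; `screen_incoming`, `box_blur`, the `classifier` callback and the `threshold` value are all made-up names for illustration. The only thing it shows is that the classifier has to read the pixels of every image, flagged or not, before it can decide whether to blur.

```python
from typing import Callable, List, Tuple

# Hypothetical representation: a grayscale image as rows of 0-255 pixel values.
Image = List[List[int]]


def box_blur(image: Image, radius: int = 1) -> Image:
    """Blur by replacing each pixel with the average of its neighbourhood."""
    height, width = len(image), len(image[0])
    blurred = []
    for y in range(height):
        row = []
        for x in range(width):
            neighbours = [
                image[ny][nx]
                for ny in range(max(0, y - radius), min(height, y + radius + 1))
                for nx in range(max(0, x - radius), min(width, x + radius + 1))
            ]
            row.append(sum(neighbours) // len(neighbours))
        blurred.append(row)
    return blurred


def screen_incoming(
    image: Image,
    classifier: Callable[[Image], float],  # assumed: returns a 0.0-1.0 score
    threshold: float = 0.5,                # assumed cut-off, purely illustrative
) -> Tuple[Image, bool]:
    """Return (image to display, whether it was flagged)."""
    # The classifier is called on every image, including ones it does not flag.
    score = classifier(image)
    if score >= threshold:
        return box_blur(image), True
    return image, False
```

Whatever model sits behind `classifier`, the control flow is the same: nothing can be blurred selectively without first inspecting everything.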

The Google reviews on this one sure aren’t happy.

You may want to remove this. It can be uninstalled. However, to find it on the store you need to look up the link in your browser. I also provided it here.

Don’t we all love Google and what it does for us behind our backs?

TL;DR

Google recently non-consensually installed a new “safety” feature on our devices that blurs any nude images that are sent to you or seen on your phone. They didn’t include any sort of update alert or anything and simply slipped it onto devices quietly. Here’s a link to the app where you can uninstall it if you wish. Of course, in order for an app to do this, it needs to see every photo that is ever sent to you. A clear privacy invasion, if I say so myself.

LLMs and openCV are now getting small enough that we should all assume that we have Narcs built into our phones and computers. We’ve discussed this before since most phones are already doing OCR and face checking of our images and stuff. But like the tech has gotten small enough, fast enough that you could easily have something watching for subversiveness at this point.

Edit: I just meant CV in general, not the openCV library.

Google started sticking Coral ASICs on Pixels a while back. As the algos have gotten more efficient, it would be unsurprising if this sort of thing were already happening on some devices.

deleted by creator

Yes, sorry.

ASICs are Application-Specific Integrated Circuits, or more plainly, computer chips designed to do a specific job. In this case they are designed to support Google’s AI algorithms. Initially they were pitched for image processing specifically.

Google Coral was one of the initial offerings by Google to developers who wanted to write code that would use this special chip. They were a pretty good deal if you wanted to do something like run image recognition on your own in-home security cameras without uploading the video anywhere else.

This technology was eventually added to Google’s flagship Android phones, the Pixel series. Things like image recognition and on-phone “magic editing” are the result of this specific technology.

I just skimmed this article, but it seems to have some more in-depth info on the subject.

Sorry for the wikipedia link but it gives a good summary. https://en.m.wikipedia.org/wiki/Tensor_Processing_Unit

Last I had heard, these chips are in all Pixel phones from the 4 onward.

yeah, I’ve been saying that. I’m saddened that people are just now starting to really grasp it.

we shouldn’t have accepted this encroachment of their authority into the minutiae of our lives.