Let’s try to skip the philosophical mental masturbation, and focus on practical philosophical matters.

Consciousness can be a thousand things, but let’s say that it’s “knowledge of itself”. As such, a conscious being must necessarily be able to hold knowledge.

In turn, knowledge boils down to a belief that is both

true - it does not contradict the real world, and

justified - it’s built around experience and logical reasoning

LLMs show awful logical reasoning*, and their claims are about things that they cannot physically experience. Thus they are unable to justify beliefs. Thus they’re unable to hold knowledge. Thus they don’t have consciousness.

their claims are about things that they cannot physically experience

Scientists cannot physically experience a black hole, or the surface of the sun, or the weak nuclear force in atoms. Does that mean they don’t have knowledge about such things?

Does that mean they don’t have knowledge about such things?

It’s more complicated than “yes” or “no”.

Scientists are better justified to claim knowledge over those things due to reasoning; reusing your example, black holes appear as a logical conclusion of the current gravity models based on general relativity, and general relativity needs to explain even things that scientists (and other people) experience directly.

However, as I’ve shown, LLMs are not able to reason properly. They have neither reasoning nor access to the real world. If they had one of them we could argue that they’re conscious, but as of now? Nah.

With that said, “can you really claim knowledge over something?” is a real problem in philosophy of science, and one of the reasons why scientists aren’t typically eager to vomit certainty on scientific matters, not even within their fields of expertise. For example, note how they’re far more likely to say stuff like “X might be related to Y” than stuff like “X is related to Y”.

black holes appear as a logical conclusion of the current gravity models…

So we agree someone does not need to have direct experience of something in order to be knowledgeable of it.

However, as I’ve shown, LLMs are not able to reason properly

As I’ve shown, neither can many humans. So lack of reasoning is not sufficient to demonstrate lack of consciousness.

nor access to the real world

Define “the real world”. Dogs hear higher pitches than humans can. Humans cannot see the infrared spectrum. Do we experience the “real world”? You also have not demonstrated why experience is necessary for consciousness; you’ve just assumed it to be true.

“can you really claim knowledge over something?” is a real problem in philosophy of science

Then probably not the best idea to try to use it as part of your argument, if people can’t even prove it exists in the first place.

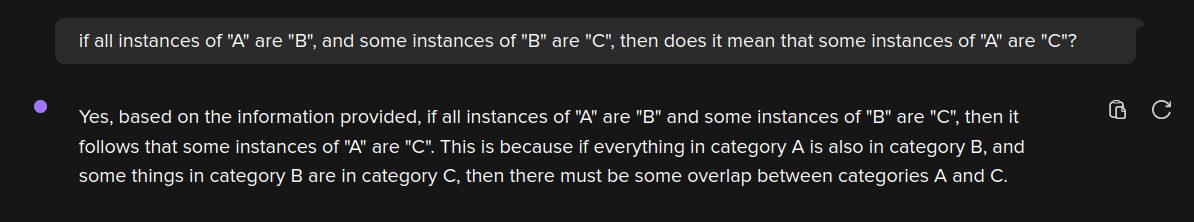

Seems a valid answer. It doesn’t “know” who any given Jane Etta Pitt’s son is. Just because X -> Y doesn’t mean that given Y you know X. There could be an alternative path to get Y.

Also, “knowing self” is just another way of saying meta-cognition, something it can do to a limited extent.

Finally I am not even confident in the standard definition of knowledge anymore. For all I know you just know how to answer questions.

Finally I am not even confident in the standard definition of knowledge anymore. For all I know you just know how to answer questions.

The definition of knowledge is a lot like the one of consciousness: there are 9001 of them, and they all suck, but you stick to one or another as it’s convenient.

In this case I’m using “knowledge = justified and true belief” because you can actually use it past human beings (e.g. for an elephant passing the mirror test)

Also, “knowing self” is just another way of saying meta-cognition, something it can do to a limited extent.

Meta-cognition and consciousness are either the same thing or strongly tied to each other. But I digress.

When you say that it can do it to a limited extent, you’re probably referring to output like “as a large language model, I can’t answer that”? Even if that was a belief, and not something explicitly added into the model (in case of failure, it uses that output), it is not a justified belief.

My whole comment shows why it is not justified belief. It doesn’t have access to reason, nor to experience.

Seems a valid answer. It doesn’t “know” who any given Jane Etta Pitt’s son is. Just because X -> Y doesn’t mean that given Y you know X. There could be an alternative path to get Y.

If it was able to reason, it should be able to know the second proposition based on the data used to answer the first one. It doesn’t.
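As a toy illustration of the inference being asked for here, a system that holds the forward fact can answer the reverse question by inverting the relation. This is only a sketch: `mother_of` and `invert` are hypothetical names, and a Python dictionary is not a claim about how an LLM stores or retrieves facts.

```python
# Toy illustration only: a lookup table is not how an LLM stores facts.
# Forward fact, as in the first prompt: person -> mother.
mother_of = {"Brad Pitt": "Jane Etta Pitt"}


def invert(relation):
    """Build the reverse relation: mother -> list of children."""
    reverse = {}
    for child, mother in relation.items():
        reverse.setdefault(mother, []).append(child)
    return reverse


# Reverse fact, as in the second prompt: mother -> children.
children_of = invert(mother_of)
print(children_of["Jane Etta Pitt"])
```

The point of the sketch is only that the second answer is derivable from the data behind the first one; whether a model performs that step is exactly what the two-conversation test probes.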

Your entire argument boils down to this: because it wasn’t able to do one calculation, it can do none. It wasn’t able/willing to do X given Y, so therefore it isn’t capable of any type of inference.

Your entire argument boils down to this: because it wasn’t able to do one calculation, it can do none.

Except that it isn’t just “a calculation”. LLMs show consistent lack of ability to handle an essential logic property called “equivalence”, and this example shows it.

And yes, LLMs, plural. I’ve provided ChatGPT 3.5 output, but feel free to test this with GPT4, Gemini, LLaMa, Claude etc.

Just be sure that you’re not instead testing whether the LLM in question has a “context” window, like some muppet ITT was doing.

It wasn’t able/willing to do X given Y, so therefore it isn’t capable of any type of inference.

Emphasis mine. That word shows that you believe that they have a “will”.

Now I get it. I understand it might deeply hurt the feelings of people like you, since it’s some unfaithful one (me) contradicting your oh-so-precious faith in LLMs. “Yes! They’re conscious! They’re sentient! OH HOLY AGI, THOU ART COMING! Let’s burn an effigy!” [insert ridiculous chanting]

Sadly I don’t give a flying fuck, and examples like this - showing that LLMs don’t reason - are a dime a dozen. I even posted a second one in this thread, go dig it. Or alternatively go join your religious sect in Reddit LARPs as h4x0rz.

“Yes! They’re conscious! They’re sentient! OH HOLY AGI, THOU ART COMING! Let’s burn an effigy!” [insert ridiculous chanting]

Sadly I don’t give a flying fuck…

“Let’s focus on practical philosophical matters…”

Such as your sarcasm towards people who disagree with you and your “not giving a fuck” about different points of view?

Maybe you shouldn’t be bloviating on the proper philosophical method to converse about such topics if this is going to be your reaction to people who disagree with your arguments.

Yup, the AI models are currently pretty dumb. We knew that when it told people to put glue on pizza.

That’s dumb, sure, but in a different way. It doesn’t show lack of reasoning; it shows incorrect information being fed into the model.

If you think this is proof against consciousness

Not really. I phrased it poorly but I’m using this example to show that the other example is not just a case of “preventing lawsuits” - LLMs suck at basic logic, period.

does that mean if a human gets that same question wrong they aren’t conscious?

That is not what I’m saying. Even humans with learning impairment get logic matters (like “A is B, thus B is A”) considerably better than those models do, provided that they’re phrased in a suitable way. That one might be a bit more advanced, but if I told you “trees are living beings. Some living beings can bite. So some trees can bite.”, you would definitely feel like something is “off”.

And when it comes to human beings, there’s another complicating factor: cooperativeness. Sometimes we get shit wrong simply because we can’t be arsed, this says nothing about our abilities. This factor doesn’t exist when dealing with LLMs though.

Just pointing out a deeply flawed argument.

The argument itself is not flawed, just phrased poorly.

That sounds like an AI that has no context window. Context windows are words thrown into the prompt after the user’s prompt is done to refine the response. The most basic is “feed the last n tokens of the questions and responses into the window”. Since the last response talked about Jane Etta Pitt, the AI would then process it and return with ‘Brad Pitt’ as an answer.

The more advanced versions have context memories (look up RAG vector databases) that learn the definitions of a bunch of nouns; instead of the previous conversation, the system sees the word “aglet” and injects the phrase “an aglet is the plastic thing at the end of a shoelace” into the context window.
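A rough sketch of the two mechanisms described in this comment, under loud assumptions: `GLOSSARY`, `last_n_tokens` and `build_prompt` are hypothetical names, whitespace splitting stands in for real tokenisation, and a plain dictionary stands in for a vector database. Real chat systems differ in many details.

```python
# Hypothetical sketch; not the implementation of any real chat system.
# A plain dict stands in for the "context memory" of noun definitions.
GLOSSARY = {"aglet": "an aglet is the plastic thing at the end of a shoelace"}


def last_n_tokens(history, n):
    """Keep only the last n whitespace-separated tokens of the conversation."""
    tokens = " ".join(history).split()
    return " ".join(tokens[-n:]) if n > 0 else ""


def build_prompt(history, question, n=50):
    """Combine recent conversation, glossary hits, and the new question."""
    notes = [text for word, text in GLOSSARY.items() if word in question.lower()]
    parts = [last_n_tokens(history, n)] + notes + [question]
    return "\n".join(part for part in parts if part)


history = ["Who is Brad Pitt's mother?", "Jane Etta Pitt."]
print(build_prompt(history, "Who is her son?"))
```

With a shared `history`, the earlier answer is simply present in the text sent to the model. With an empty `history`, as in a brand-new chat, only the question itself is sent.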

I did this as two separate conversations exactly to avoid the “context” window. It shows that the LLM in question (ChatGPT 3.5, as provided by DDG) has the information necessary to correctly output the second answer, but lacks the reasoning to do so.

If I did this as a single conversation, it would only prove that it has a “context” window.

So if I asked you something at two different times in your life, the first time you knew the answer, and the second time you had forgotten our first conversation, that proves you are not a reasoning intelligence?

Seems kind of disingenuous to say “the key to reasoning is memory”, then set up a scenario where an AI has no memory to prove it can’t reason.

So if I asked you something at two different times in your life, the first time you knew the answer, and the second time you had forgotten our first conversation, that proves you are not a reasoning intelligence?

You’re anthropomorphising it as if it was something able to “forget” information, like humans do. It isn’t - the info is either present or absent in the model, period.

But let us pretend that it is able to “forget” info. Even then, those two prompts were not sent on meaningfully “different times” of the LLM’s “life” [SIC]; one was sent a few seconds after another, in the same conversation.

And this test can be repeated over and over and over if you want, in different prompt orders, to show that your implicit claim is bollocks. The failure to answer the second question is not a matter of the model “forgetting” things, but of being unable to handle the information to reach a logical conclusion.

I’ll link again this paper because it shows that this was already studied.

Seems kind of disingenuous to say “the key to reasoning is memory”

The one being at least disingenuous here is you, not me. More specifically:

At no point did I say or even imply that the key to reasoning is memory; don’t be a liar claiming otherwise.

Your whole comment boils down to a fallacy called false equivalence.

I was referring to you and your memory in that statement comparing you to an it. Are you not something to be anthropomorphised?

|But let us pretend that it is able to “forget” info.

That is literally all computers do all day. Read info. Write info. Overwrite info. Don’t need to pretend a computer can do something it has been doing for the last 50 years.

|Those two prompts were not sent on meaningfully “different times”

If you started up two Minecraft games with different seeds, but “at the exact same time”, you would get two different world generations. Meaningfully “different times” is based on the random seed, not chronological distance. I dare say that it is close to anthropomorphising AI to think it would remember something from a few seconds ago because that is how humans work.

|And this test can be repeated over and over and over if you want

|I’ll link again this paper because it shows that this was already studied.

I was referring to you and your memory in that statement comparing you to an it. Are you not something to be anthropomorphised?

I’m clearly saying that you’re anthropomorphising the model with the comparison. This is blatantly obvious for anyone with at least basic reading comprehension. Unlike you, apparently.

That is literally all computers do all day. Read info. Write info. Overwrite info. Don’t need to pretend a computer can do something it has been doing for the last 50 years.

Yeah, the data in my SSD “magically” disappears. The SSD forgets it! Hallelujah, my SSD is sentient! Praise Jesus. Same deal with my RAM, that’s why this comment was never sent - Tsukuyomi got rid of the contents of my RAM! (Do I need a /s tag here?)

…on a more serious take, no, the relevant piece of info is not being overwritten, as you can still retrieve it through further prompts in newer chats. Your “argument” is a sorry excuse of a Chewbacca defence and odds are that even you know it.

If you started up two Minecraft games with different seeds, but “at the exact same time”, you would get two different world generations. Meaningfully “different times” is based on the random seed, not chronological distance.

This is not a matter of seed, period. Stop being disingenuous.

I dare say that it is close to anthropomorphising AI to think it would remember something from a few seconds ago because that is how humans work.

So you actually got what “anthropomorphisation” referred to, even if pretending otherwise. You could at least try to not be so obviously disingenuous, you know. That said, the bullshit here was already addressed above.

|And this test can be repeated over and over and over if you want

[insert picture of the muppet “testing” the AI, through multiple prompts within the same conversation]

Congratulations. You just “proved” that there’s a “context” window. And nothing else. 🤦

Think a bit on why I inserted the two prompts in two different chats with the same bot. The point here is not to show that the bloody LLM has a “context” window dammit. The ability to use a “context” window does not show reasoning, it shows the ability to feed tokens from the earlier prompts+outputs as “context” back into the newer output.

You linked to a year old paper [SIC] showing that it already is getting the A->B, B->A thing right 30% of the time.

Wow, we’re in September 2024 already? Perhaps May 2025? (The first version of the paper is eight months old, not “a yurrr old lol lmao”. And the current revision is three days old. Don’t be a liar.)

Also showing this shit “30% of the time” shows inability to operate logically on those sentences. “Perhaps” not surprisingly, it’s simply doing what LLMs do: it does not reason dammit, it matches token patterns.

You clearly couldn’t be arsed to read the source that you yourself shared, right? Do it. Here is what the source that you linked says:

The Reversal Curse has several implications: // Logical Reasoning Failure: It highlights a fundamental limitation in LLMs’ ability to perform basic logical deduction.

Logical Reasoning Weaknesses: LLMs appear to struggle with basic logical deduction.

You just shot your own foot dammit. It is not contradicting what I am saying. It confirms what I said over and over, that you’re trying to brush off through stupidity, lack of basic reading comprehension, a diarrhoea of fallacies, and the likes:

LLMs show awful logical reasoning.

At this rate, the only thing that you’re proving is that Brandolini’s Law is real.

While I’m still happy to discuss with other people across this thread, regardless of agreement or disagreement, I’m not further wasting my time with you. Please go be a dead weight elsewhere.

This is blatantly obvious for anyone with at least basic reading comprehension. Unlike you, apparently

So if I’m understanding you correctly:

“Philosophical mental masturbation” = bad

“Personal attacks because someone disagreed with you” = perfectly fine

Let’s try to skip the philosophical mental masturbation, and focus on practical philosophical matters.

Consciousness can be a thousand things, but let’s say that it’s “knowledge of itself”. As such, a conscious being must necessarily be able to hold knowledge.

In turn, knowledge boils down to a belief that is both

LLMs show awful logical reasoning*, and their claims are about things that they cannot physically experience. Thus they are unable to justify beliefs. Thus they’re unable to hold knowledge. Thus they don’t have consciousness.

*Here’s a simple practical example of that:

Scientists cannot physically experience a black hole, or the surface of the sun, or the weak nuclear force in atoms. Does that mean they don’t have knowledge about such things?

It’s more complicated than “yes” or “no”.

Scientists are better justified to claim knowledge over those things due to reasoning; reusing your example, black holes appear as a logical conclusion of the current gravity models based on general relativity, and general relativity needs to explain even things that scientists (and other people) experience directly.

However, as I’ve shown, LLMs are not able to reason properly. They have neither reasoning nor access to the real world. If they had one of them we could argue that they’re conscious, but as of now? Nah.

With that said, “can you really claim knowledge over something?” is a real problem in philosophy of science, and one of the reasons why scientists aren’t typically eager to vomit certainty on scientific matters, not even within their fields of expertise. For example, note how they’re far more likely to say stuff like “X might be related to Y” than stuff like “X is related to Y”.

So we agree someone does not need to have direct experience of something in order to be knowledgeable of it.

As I’ve shown, neither can many humans. So lack of reasoning is not sufficient to demonstrate lack of consciousness.

Define “the real world”. Dogs hear higher pitches than humans can. Humans cannot see the infrared spectrum. Do we experience the “real world”? You also have not demonstrated why experience is necessary for consciousness; you’ve just assumed it to be true.

Then probably not the best idea to try to use it as part of your argument, if people can’t even prove it exists in the first place.

Seems a valid answer. It doesn’t “know” who any given Jane Etta Pitt’s son is. Just because X -> Y doesn’t mean that given Y you know X. There could be an alternative path to get Y.

Also, “knowing self” is just another way of saying meta-cognition, something it can do to a limited extent.

Finally I am not even confident in the standard definition of knowledge anymore. For all I know you just know how to answer questions.

I’ll quote out of order, OK?

The definition of knowledge is a lot like the one of consciousness: there are 9001 of them, and they all suck, but you stick to one or another as it’s convenient.

In this case I’m using “knowledge = justified and true belief” because you can actually use it past human beings (e.g. for an elephant passing the mirror test)

Meta-cognition and consciousness are either the same thing or strongly tied to each other. But I digress.

When you say that it can do it to a limited extent, you’re probably referring to output like “as a large language model, I can’t answer that”? Even if that was a belief, and not something explicitly added into the model (in case of failure, it uses that output), it is not a justified belief.

My whole comment shows why it is not justified belief. It doesn’t have access to reason, nor to experience.

If it was able to reason, it should be able to know the second proposition based on the data used to answer the first one. It doesn’t.

Your entire argument boils down to this: because it wasn’t able to do one calculation, it can do none. It wasn’t able/willing to do X given Y, so therefore it isn’t capable of any type of inference.

Except that it isn’t just “a calculation”. LLMs show consistent lack of ability to handle an essential logic property called “equivalence”, and this example shows it.

And yes, LLMs, plural. I’ve provided ChatGPT 3.5 output, but feel free to test this with GPT4, Gemini, LLaMa, Claude etc.

Just be sure that you’re not instead testing whether the LLM in question has a “context” window, like some muppet ITT was doing.

Emphasis mine. That word shows that you believe that they have a “will”.

Now I get it. I understand it might deeply hurt the feelings of people like you, since it’s some unfaithful one (me) contradicting your oh-so-precious faith in LLMs. “Yes! They’re conscious! They’re sentient! OH HOLY AGI, THOU ART COMING! Let’s burn an effigy!”

[insert ridiculous chanting]

Sadly I don’t give a flying fuck, and examples like this - showing that LLMs don’t reason - are a dime a dozen. I even posted a second one in this thread, go dig it. Or alternatively go join your religious sect in Reddit LARPs as h4x0rz.

/me snaps the pencil

Someone says: YOU MURDERER!

“Let’s focus on practical philosophical matters…”

Such as your sarcasm towards people who disagree with you and your “not giving a fuck” about different points of view?

Maybe you shouldn’t be bloviating on the proper philosophical method to converse about such topics if this is going to be your reaction to people who disagree with your arguments.

And get down to the actual masturbation! Am I right? Of course I am.

[Replying to myself to avoid editing the above]

Here’s another example. This time without involving names of RL people, only logical reasoning.

And here’s a situation showing that it’s bullshit:

All A are B. Some B are C. But no A is C. So yes, they have awful logical reasoning.
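The counterexample above can be checked mechanically with sets. This is a minimal sketch with hypothetical set contents (chosen to echo the tree example elsewhere in this thread): both premises hold, yet the conclusion “some A are C” fails, so an inference of the form “all A are B, some B are C, so some A are C” is not valid.

```python
# Hypothetical sets, chosen only so that both premises hold.
A = {"oak", "pine"}                # e.g. trees
B = {"oak", "pine", "dog", "cat"}  # e.g. living beings
C = {"dog"}                        # e.g. things that can bite

all_a_are_b = A <= B        # premise 1: every element of A is in B
some_b_are_c = bool(B & C)  # premise 2: B and C share at least one element
some_a_are_c = bool(A & C)  # the conclusion being tested

print(all_a_are_b, some_b_are_c, some_a_are_c)
```

One such case is enough: a valid inference would need the conclusion to hold for every choice of sets that satisfies the premises.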

You could also have a situation where C is a subset of B, and it would obey the prompt by the letter. Like this:

Yup, the AI models are currently pretty dumb. We knew that when it told people to put glue on pizza.

If you think this is proof against consciousness, does that mean if a human gets that same question wrong they aren’t conscious?

For the record I am not arguing that AI systems can be conscious. Just pointing out a deeply flawed argument.

That’s dumb, sure, but in a different way. It doesn’t show lack of reasoning; it shows incorrect information being fed into the model.

Not really. I phrased it poorly but I’m using this example to show that the other example is not just a case of “preventing lawsuits” - LLMs suck at basic logic, period.

That is not what I’m saying. Even humans with learning impairment get logic matters (like “A is B, thus B is A”) considerably better than those models do, provided that they’re phrased in a suitable way. That one might be a bit more advanced, but if I told you “trees are living beings. Some living beings can bite. So some trees can bite.”, you would definitely feel like something is “off”.

And when it comes to human beings, there’s another complicating factor: cooperativeness. Sometimes we get shit wrong simply because we can’t be arsed, this says nothing about our abilities. This factor doesn’t exist when dealing with LLMs though.

The argument itself is not flawed, just phrased poorly.

That sounds like an AI that has no context window. Context windows are words thrown into the prompt after the user’s prompt is done to refine the response. The most basic is “feed the last n tokens of the questions and responses into the window”. Since the last response talked about Jane Etta Pitt, the AI would then process it and return with ‘Brad Pitt’ as an answer.

The more advanced versions have context memories (look up RAG vector databases) that learn the definitions of a bunch of nouns; instead of the previous conversation, the system sees the word “aglet” and injects the phrase “an aglet is the plastic thing at the end of a shoelace” into the context window.

I did this as two separate conversations exactly to avoid the “context” window. It shows that the LLM in question (ChatGPT 3.5, as provided by DDG) has the information necessary to correctly output the second answer, but lacks the reasoning to do so.

If I did this as a single conversation, it would only prove that it has a “context” window.

So if I asked you something at two different times in your life, the first time you knew the answer, and the second time you had forgotten our first conversation, that proves you are not a reasoning intelligence?

Seems kind of disingenuous to say “the key to reasoning is memory”, then set up a scenario where an AI has no memory to prove it can’t reason.

You’re anthropomorphising it as if it was something able to “forget” information, like humans do. It isn’t - the info is either present or absent in the model, period.

But let us pretend that it is able to “forget” info. Even then, those two prompts were not sent on meaningfully “different times” of the LLM’s “life” [SIC]; one was sent a few seconds after another, in the same conversation.

And this test can be repeated over and over and over if you want, in different prompt orders, to show that your implicit claim is bollocks. The failure to answer the second question is not a matter of the model “forgetting” things, but of being unable to handle the information to reach a logical conclusion.

I’ll link again this paper because it shows that this was already studied.

The one being at least disingenuous here is you, not me. More specifically:

|You’re anthropomorphising it

I was referring to you and your memory in that statement comparing you to an it. Are you not something to be anthropomorphised?

|But let us pretend that it is able to “forget” info.

That is literally all computers do all day. Read info. Write info. Overwrite info. Don’t need to pretend a computer can do something it has been doing for the last 50 years.

|Those two prompts were not sent on meaningfully “different times”

If you started up two Minecraft games with different seeds, but “at the exact same time”, you would get two different world generations. Meaningfully “different times” is based on the random seed, not chronological distance. I dare say that it is close to anthropomorphising AI to think it would remember something from a few seconds ago because that is how humans work.

|And this test can be repeated over and over and over if you want

|I’ll link again this paper because it shows that this was already studied.

You linked to a year old paper showing that it already is getting the A->B, B->A thing right 30% of the time. Technology marches on; this was just what I was able to find with a simple Google search.

|At no point did I say or even imply that the key to reasoning is memory

|LLMs show awful logical reasoning … Thus they’re unable to hold knowledge.

Oh, my bad. Got A->B B->A backwards. You said since they can’t reason, they have no memory.

I’m clearly saying that you’re anthropomorphising the model with the comparison. This is blatantly obvious for anyone with at least basic reading comprehension. Unlike you, apparently.

Yeah, the data in my SSD “magically” disappears. The SSD forgets it! Hallelujah, my SSD is sentient! Praise Jesus. Same deal with my RAM, that’s why this comment was never sent - Tsukuyomi got rid of the contents of my RAM! (Do I need a /s tag here?)

…on a more serious take, no, the relevant piece of info is not being overwritten, as you can still retrieve it through further prompts in newer chats. Your “argument” is a sorry excuse of a Chewbacca defence and odds are that even you know it.

This is not a matter of seed, period. Stop being disingenuous.

So you actually got what “anthropomorphisation” referred to, even if pretending otherwise. You could at least try to not be so obviously disingenuous, you know. That said, the bullshit here was already addressed above.

Congratulations. You just “proved” that there’s a “context” window. And nothing else. 🤦

Think a bit on why I inserted the two prompts in two different chats with the same bot. The point here is not to show that the bloody LLM has a “context” window dammit. The ability to use a “context” window does not show reasoning, it shows the ability to feed tokens from the earlier prompts+outputs as “context” back into the newer output.

Wow, we’re in September 2024 already? Perhaps May 2025? (The first version of the paper is eight months old, not “a yurrr old lol lmao”. And the current revision is three days old. Don’t be a liar.)

Also showing this shit “30% of the time” shows inability to operate logically on those sentences. “Perhaps” not surprisingly, it’s simply doing what LLMs do: it does not reason dammit, it matches token patterns.

You clearly couldn’t be arsed to read the source that you yourself shared, right? Do it. Here is what the source that you linked says:

You just shot your own foot dammit. It is not contradicting what I am saying. It confirms what I said over and over, that you’re trying to brush off through stupidity, lack of basic reading comprehension, a diarrhoea of fallacies, and the likes:

LLMs show awful logical reasoning.

At this rate, the only thing that you’re proving is that Brandolini’s Law is real.

While I’m still happy to discuss with other people across this thread, regardless of agreement or disagreement, I’m not further wasting my time with you. Please go be a dead weight elsewhere.

So if I’m understanding you correctly:

“Philosophical mental masturbation” = bad

“Personal attacks because someone disagreed with you” = perfectly fine