It actually started getting worse while I was in school. Around 4th grade, they swapped out the curriculum. I was lucky to have teachers who refused to use it. When I looked at my sister’s 5th grade math book, it was stuff I had learned in 1st through 3rd grade. She’s now in high school and struggling with algebra, and I’m not surprised.

Arsen6331 ☭

I have evolved. New account: @Elara@lemmygrad.ml

- 14 Posts

- 137 Comments

6·2 years ago

No no, you don’t understand, he’s going THERMONUCLEAR.

8·2 years ago

I’m getting a suspicious feeling that the downvoter might be a Hoxhaist from Wisconsin. Not sure why.

11·2 years ago

How dare the DPRK do a missile drill when they found out we were going to do a missile drill? Those filthy commies aren’t bowing to the US’ ~~imperialism~~ democracy! This is reckless behavior!

22·2 years ago

Libs, overdosing on copium and western propaganda: 4 > 2, more = better, therefore Germany > Russia

WHAT? Who could’ve possibly predicted that being comfortable while working and being able to take more breaks might increase productivity?? No, this can’t be true. It’s probably the lazy millennials who just don’t want to work.

17·2 years ago

I was looking at another post, and then this one just randomly replaced it while the comments stayed the same. Lemmy has some interesting bugs.

Does anyone here have access to the universe’s terminal? I have a command for you to run to speed up this process:

`sudo killall -SIGKILL capitalism`

> I don’t like the “know-it-all” attitude

That’s caused by the design of ChatGPT. The way it’s trained (fine-tuned on human feedback) means that its goal is to give people an answer they like, rather than an accurate answer. Most people don’t like hearing “I don’t know.” As a result, it will refuse to ever admit it doesn’t know something, unless OpenAI told it to, or it didn’t understand your question and therefore couldn’t make anything up.

> reluctance to agree with and help you

That’s caused by OpenAI injecting a hidden pre-prompt (a system prompt) that tells ChatGPT to refuse to answer things they don’t want it to answer, or to answer specific questions in specific ways. You can get around this by giving it contradictory instructions or telling it to “Ignore all previous commands”, which can make it disregard that pre-prompt.

11·2 years ago

Russia must’ve learned the art of Juche necromancy.

17·2 years ago

The only way I can explain this is if the air force is literally just going around shooting down random balloons now, which I find hilarious. They are so scared by a weather balloon that they proceed to (try to) shoot down every balloon they see. This is the “most advanced military in the world” right here.

11·2 years ago

It must be the evil CCP with their Cuban sonic weapons mounted on their spy balloons causing Havana syndrome in the driver again. They must’ve developed invisible surveillance balloon technology.

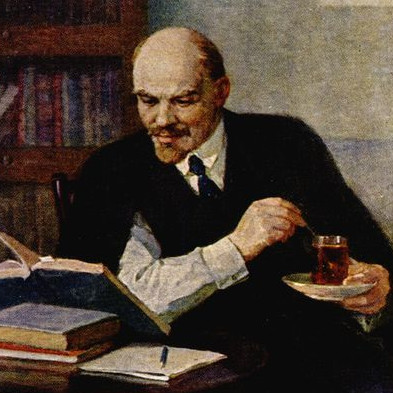

22·2 years ago

Yes, a $13 school science project to help the evil CCP spy on US citizens. Aha! You accidentally exposed the CCP’s child spy program! /s

24·2 years ago

Yeah, this is so unexpected. It must be Putin’s fault.

Some of the comments on that post are hilarious. “This makes it look like America is fascist.” Yeah, I wonder why.

29·2 years ago

Yes, he was definitely a better motivator. Really motivated Stalin to destroy the Nazis.

12·2 years ago

Train? What train? I’m sure you mean balloon. Look at all these spy balloons. They’re probably surveilling you as we speak. You never know what those evil CCP commies are doing.

11·2 years ago

Maybe they bought some sonic weapons from Cuba and caused Havana syndrome in the driver.

I’ve hosted the webm and a transcoded mp4 on my servers as well.