cross-posted from: https://lemm.ee/post/54636974

cross-posted from: https://lemmy.world/post/25156749

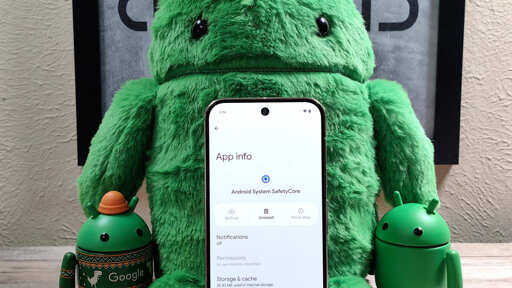

I woke up this morning to find a new little friend on my phone! Android Safety Core.

So what is this great new application that was non-consensually installed on my device, with no indicator that it ever was except an alert from Tracker Control?

There isn’t a lot of information on it, but essentially it’s an app that “protects” you from obscene images on your phone.

“One of the new features the company announced is called Sensitive Content Warnings. This is designed to give you more control over seeing and sending nude images. When enabled, it blurs images that might contain nudity before you view them and then prompts you with what Google calls a “speed bump” containing “help-finding resources and options, including to view the content.” The feature also kicks in and shows a so-called “speed bump” when you try to send or forward an image that might contain nudity.” - Android Authority

As for the Google Play description, it’s very detailed about what your new friend entails.

Very descriptive.

So essentially it blurs any images of nudity that are sent to you or that you view.

Obviously the app has to see all the images that are sent to you in order to do this. I’m sure this won’t be abused!

The Google reviews on this one sure aren’t happy.

You may want to remove this. It can be uninstalled. However, to find it on the store you need to look up the link in your browser. I also provided it here.

Don’t we all love Google and what it does behind our backs for us?

TL;DR

Google recently non-consensually installed a new “safety” feature on our devices that blurs any nude images that are sent to you or seen on your phone. They didn’t include any sort of update alert or anything and simply slipped it onto devices quietly. Here’s a link to the app where you can uninstall if you wish. Of course, in order for an app to do this, it needs to see every photo that is ever sent to you. A clear privacy invasion if I say so myself.

iOS has the same feature, called “Sensitive Content Warning”. I think Apple originally tried to make it a thing where it would send stuff to Apple if it detected hashes of known CP, which I guess is a bit different because it wasn’t machine learning (or was it? Is the confusion intentional?). But they got so much criticism that they rolled it back to what it is now, where it doesn’t send anything and has a toggle in the settings.

I think it’ll ramp up over time like a lot of other things. Using machine learning in more places. Phone operating systems already have so many services that contact remote servers. I’m sure that will only increase, and they’ll ramp up combining the machine learning with phoning home.

I still wonder about the US government’s interaction with tech company software. A quote from a Wikipedia article section on Dual_EC_DRBG in the BSAFE software, which I read recently.

Are there agreements with executives to implement these kinds of weird features that were clearly more work to implement than their apparent utility to end users? Do Google or Apple employees just have their boss say, “we’re prioritizing the nudity detector, get to work!” and that’s that? What kind of narrative do they cook up to make this make sense to the people implementing it? Because if I worked on something like this I’d be like, “why is this necessary to spend time on? Don’t we have a thousand other priorities?”

But who knows, maybe the point of this is just to have an automated nudity blur API that any app can use. The same way Hexbear has a NSFW blur feature but more sophisticated. And instead of using server power to detect it, they just do it on people’s phones so server hosts don’t have to pay for that electricity. idk.

I think it’s developed under the pretence of stopping CSAM from propagating.

For example, in the case of Apple, here are their plans from when they were rolling out the software and how they justified it.

Here is an article on why they ended up cancelling their plans.

Why would Google go through with this? I’d assume it’s because Google never cared about your privacy and never tried to market themselves off of it.