Wasn’t alpaca.cpp incorporated into llama.cpp half a year ago?

I think you’re missing out on 6 months of AI development if you’re still using it. And there has been a lot of progress in the last 6 months.

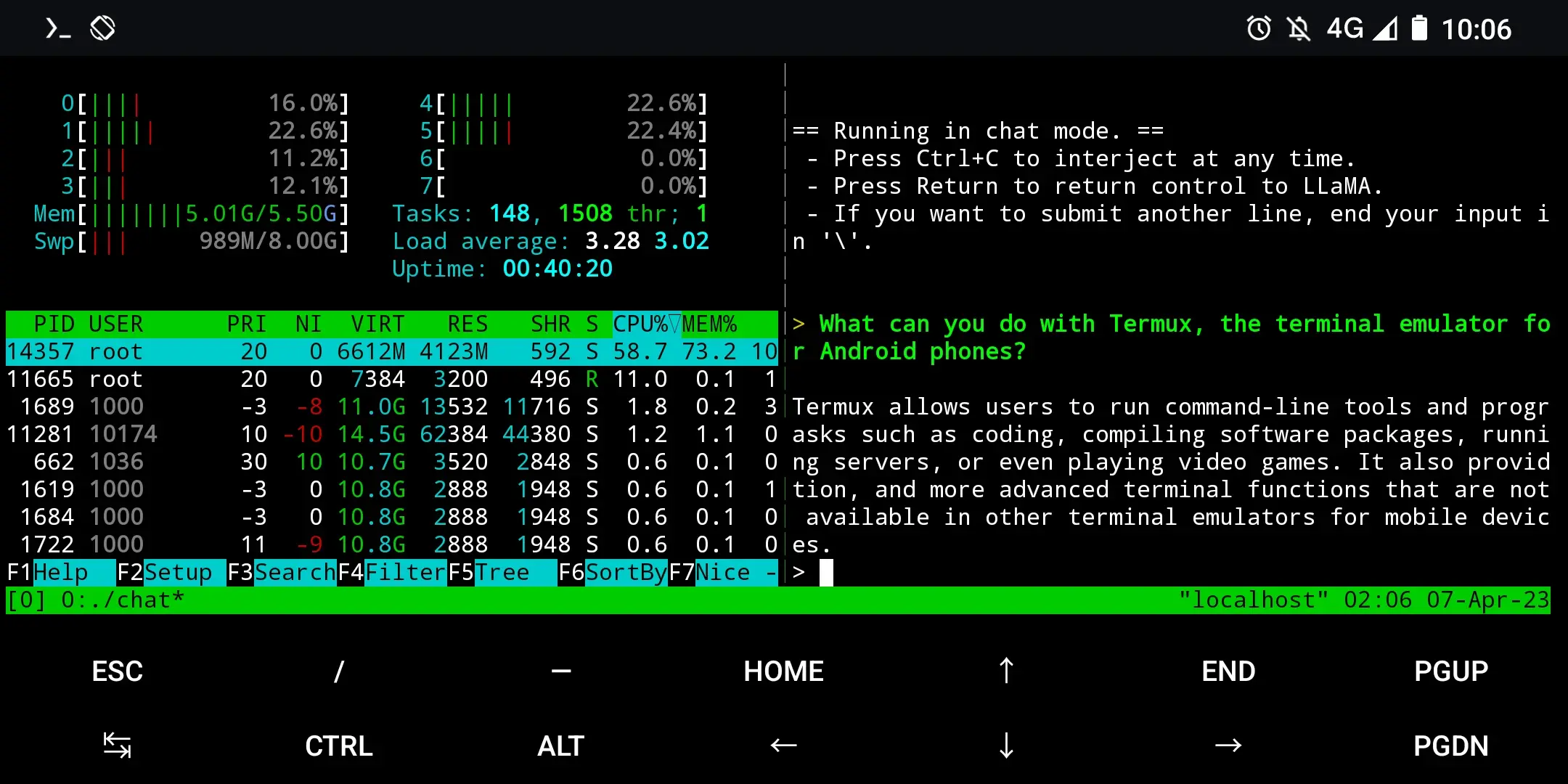

Use llama.cpp and a Llama 2 based model.

This is the only guide I found for Android. On Linux, I just discovered ollama, which is great.

Yeah. Make sure your phone has 8GB of RAM or more. I think you can get it to work with 6GB, but with less you won’t be able to run anything useful. As I said, llama.cpp is another piece of software, and it has instructions for Termux on Android. You need to scroll down a bit to find them. It also works on Linux, Mac and Windows. But as your phone probably doesn’t meet the specs (mine doesn’t either), you don’t need to try.

ollama seems good.

If you’re interested in large language models, have a look at the following communities:

Make sure to read the “Getting started” and other guides for software recommendations etc. You’ll find them in the sidebar (About).

Thanks for the suggestions. Is there any way to run it with 4GB of RAM? Maybe with smaller 2B models instead of 7B ones?

Yes. Technically it’s perfectly doable.
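As a rough back-of-the-envelope check (my own rule of thumb, not something taken from the llama.cpp docs): the model weights alone take about parameters × bits per weight ÷ 8 bytes. The function name below is just for illustration, and the 4/8/16-bit levels are assumed examples of quantized vs. unquantized weights. It ignores the context cache, runtime overhead, and the RAM that Android and your other apps already use, so treat the numbers as a lower bound.

```python
def estimate_weight_memory_gb(params_billion: float, bits_per_weight: float) -> float:
    """Hypothetical helper: rough size of the model weights alone, in GB (10^9 bytes).

    Assumes every weight is stored with `bits_per_weight` bits and ignores
    the context cache, runtime overhead and the operating system.
    """
    total_bytes = params_billion * 1e9 * bits_per_weight / 8
    return total_bytes / 1e9


if __name__ == "__main__":
    # Compare a few model sizes at a few assumed bit widths.
    for name, params in [("2B", 2.0), ("7B", 7.0), ("13B", 13.0)]:
        for bits in (4, 8, 16):
            gb = estimate_weight_memory_gb(params, bits)
            print(f"{name} @ {bits}-bit: ~{gb:.1f} GB")
```

At 4 bits per weight this gives about 1 GB for a 2B model and 3.5 GB for a 7B model, which is roughly why a 2B model is plausible on a 4GB phone while a 7B one is a squeeze.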

As for usefulness, it depends on what you’re trying to do with it. Maybe you should download a small model on your computer first and see what it’s like. There are small models like phi-1 for Python coding. But if you’re trying to talk to it or ask it questions, you’ll be disappointed by models below 7B. They produce grammatically correct sentences, but last time I tried, they weren’t really coherent and struggled to understand simple input. It’s more like autocomplete. (YMMV)

I currently like the models Mistral-7B-OpenOrca and MythoMax-L2-13B. I think they’re good for general purpose applications (besides programming). If you have some specific use-case in mind, there are lots of other models out there that might be better for this or that.

I use them with KoboldCpp (or with Oobabooga’s Text generation web UI and the llamacpp backend) on my computer. If you have a graphics card with enough VRAM, you probably want something else. But I think these are good basic tools for people without a high-end computer. Oobabooga includes everything, so you can also use it with an Nvidia GPU.

Oobabooga was very slow. I tried h2ogpt and it was good; I could also pass it docs for custom training, but it was still slow. Lord of the language models is the easiest to set up and has a nice interface, but it’s still a bit slow. Ollama is the fastest I’ve tried so far. I couldn’t get its web-based UI to work yet, but I hope I’ll succeed. After that, I need a way to pass it custom docs.

I found sherpa, but its requirements are too high and it crashes on my phone.

https://play.google.com/store/apps/details?id=com.biprep.sherpa