- cross-posted to:

- technology@lemmy.ml

The echoes of Y2K resonate in today’s AI landscape as executives flock to embrace the promise of cost reduction through outsourcing to language models.

However, history is poised to repeat itself with a similar outcome of chaos and disillusionment. The misguided belief that language models can replace the human workforce will yield hilarious yet unfortunate results.

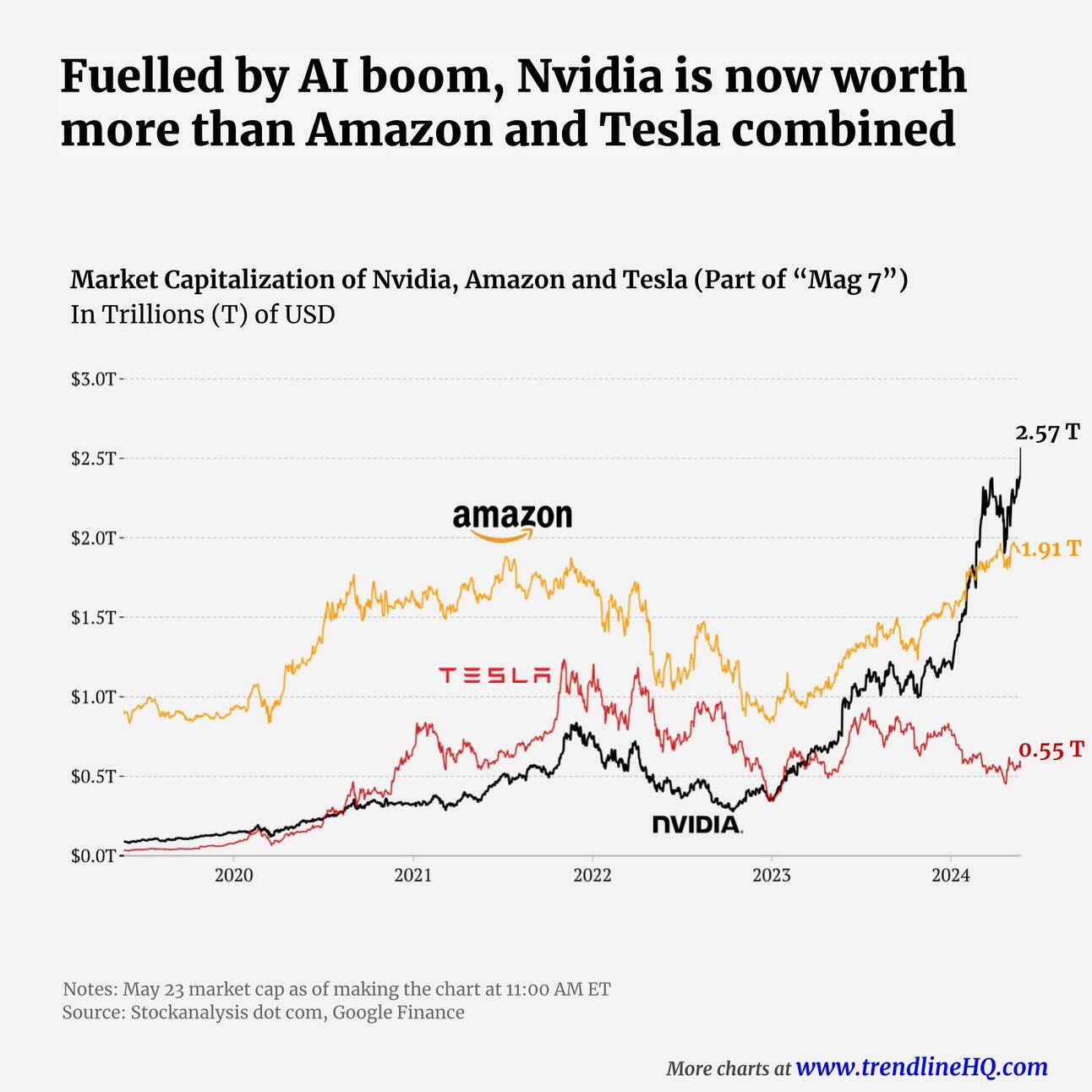

I wonder if there is a potential anti-trust situation brewing for Nvidia. I know American anti-trust is a complete joke, but seeing how Nvidia is pulling in a >50% profit margin with no alternative in sight in a critical field like AI, you have to wonder about it.

AMD WAS funding a CUDA translation layer but they stopped. So Nvidia has no competition now at all.

The flipside could be that this boom is fueled entirely by LLM and LLM-adjacent hype. Their GPU data center renting seems to be their most profitable business. For all intents and purposes this could be a dead end, as the tech will probably not be able to have the wide spectrum of capabilities that the techpopes seem to purport it will. Non-LLM AI could be safe for now from Nvidia dependency but I don’t know what developments are like in that field.

Yeah, that’s a good question, they effectively have a monopoly on the market. I also suspect that the whole AI thing is a bubble. There is genuinely useful stuff you can do with language models, but it’s hugely overhyped right now. That said, a new hot thing might show up once the AI hype starts dying down too. It seems like we always find use for more processing power.

These LLMs seem an excellent fit for expert systems, as long as you train them on relevant data instead of all the garbage of the collective internet.

For sure, there are a lot of legitimate use cases for this stuff. We’re actually starting to see them being put to good use in China. One story I saw a little while back was about monitoring rail infrastructure and identifying potential faults so they can be repaired proactively. This is an excellent use case because it leverages the ability of the model to correlate a whole bunch of data, which is something that humans find challenging to do, but also keeps it out of proactive decision-making. This kind of approach of having LLMs work alongside humans seems like the most promising one in the near future.

However, history is poised to repeat itself with a similar outcome of chaos and disillusionment. The misguided belief that language models can replace the human workforce will yield hilarious yet unfortunate results.

Even if AI can’t get much better than what has already been demonstrated (which I don’t think is the case, but let’s consider it), there are already quite a few jobs that can be at least partially automated. That alone can change the world a great deal, whether through permanent unemployment above 10-20% in every country, or through the bourgeoisie agreeing to cut working hours to just a few so the system doesn’t collapse.

I agree that machine learning will keep improving, and that there are jobs already being replaced. I’m just pointing out that it’s currently very far from being able to do what people are claiming. In particular, it’s pretty decent at tasks like content generation, or searching through information to pull up relevant details. So, jobs in these areas will be impacted. However, it’s simply a non-starter for any work where there’s a specific correct result required. Stuff like self-driving cars is a good example of this. The main problem is that the models are just trained on a bunch of text and images, and then they make inferences based on that. They don’t have any understanding in a human sense of what they’re doing because they don’t have the model of the world that we have.

In my opinion, really interesting AI developments will start happening once we start seeing models trained using embodiment, where they start encoding rules of the physical world internally through experience the way children do, and then they’re taught language within that context.

With regards to capitalism, it’s definitely going to be interesting to see how the system grapples with this. Ultimately, one of the fundamental contradictions is that companies need consumers with disposable income, and if you start doing mass automation of jobs, then you’re also eliminating your consumer demographic.